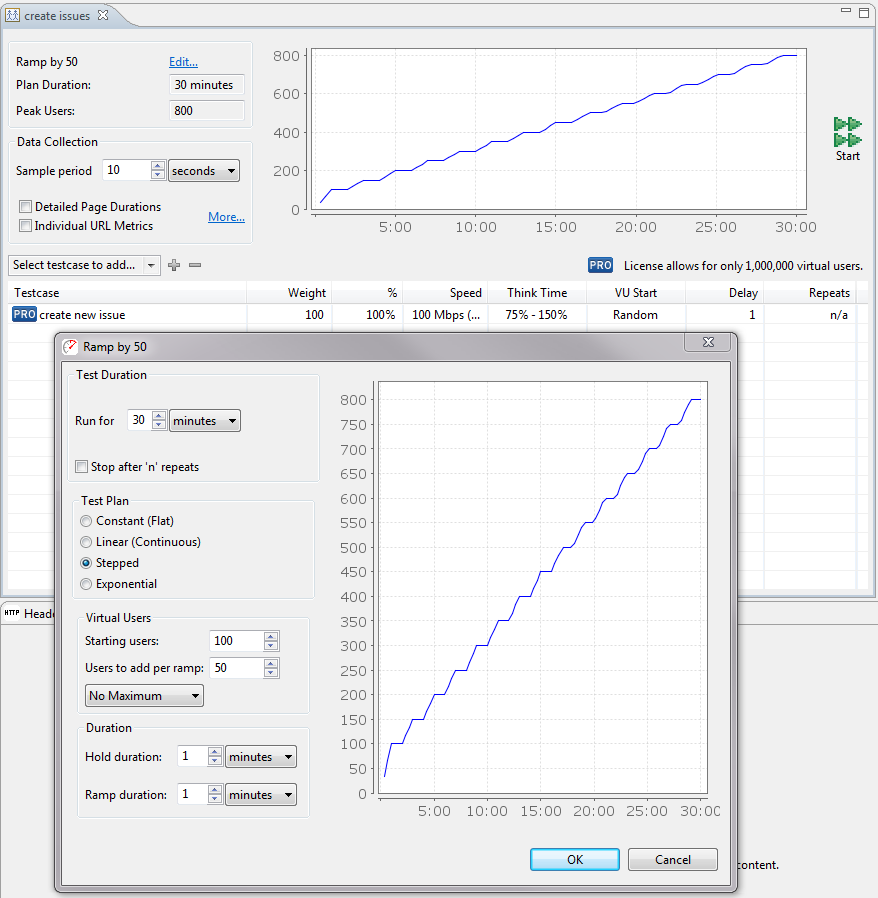

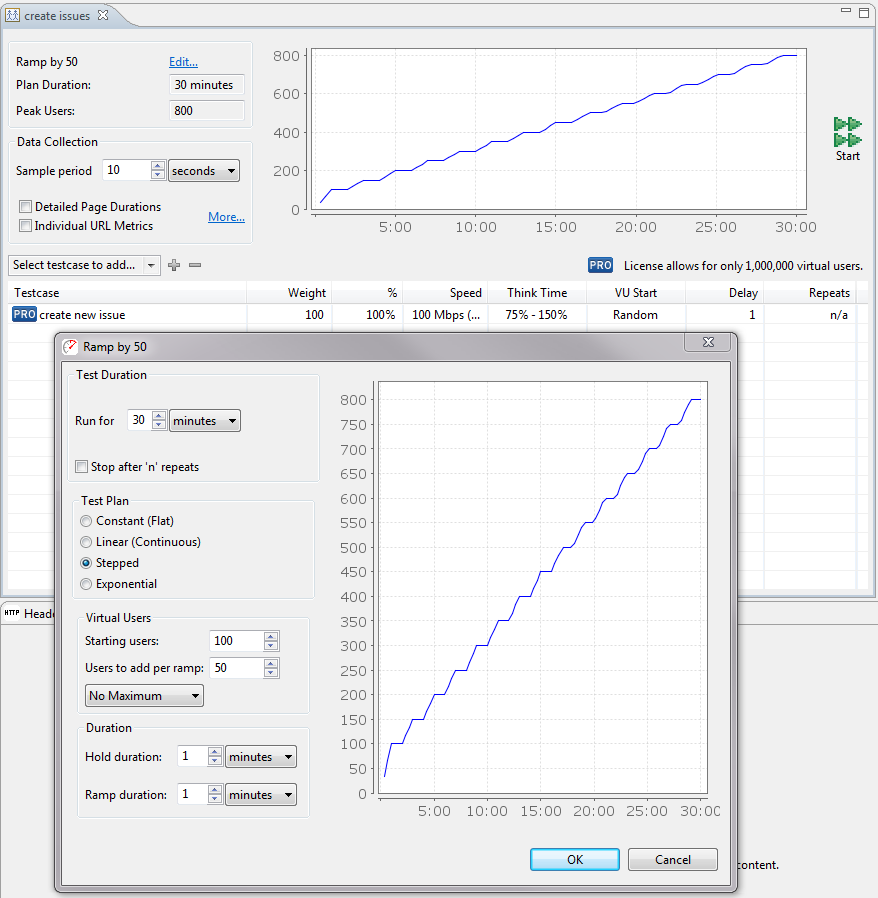

The Load Test Configuration Editor is used to configure a load test. To create a new load test configuration, right-click an existing load test or the Load Test folder in the Navigator View and select the New Load Test Configuration item. A new load test configuration initially contains the settings of the most recently used configuration, or the application defaults if no load tests have been configured yet. To open an existing load test configuration in the Load Test Configuration Editor, either double-click the configuration in the Navigator View or right-click it and select the Edit item.

The Load Test Configuration Editor contains three major configuration sections: Test Plan, Data Collection, and Testcases. If any field contains an invalid entry or a configuration error is detected while you change the configuration, a message is displayed immediately (in red).

The Test Plan Editor allows you to specify how and when virtual users will be added to the load test.

The load test duration can be specified in hours or minutes. Choose the duration according to your testing goals. If you just want an idea of how fast certain operations on your site are, a test only a few minutes long can provide useful performance information; you can then adjust parameters in your scripts or machine configuration and see whether the changes affect performance. If, however, you are trying to stress your web site to see whether anything breaks, run the test over a longer period of time.

Alternatively, the "Stop after 'n' repeats" option lets the test run for as long as necessary for each testcase to be repeated the number of times specified in its "Repeats" column. Each testcase therefore runs a predetermined number of times, and the load test stops once all of them have completed.
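
The stop condition for this option can be pictured as a check over per-testcase counters. This is a minimal sketch, assuming the application keeps a count of completed repetitions for each testcase; the function `all_repeats_done` and the dictionaries passed to it are hypothetical illustrations, not part of the application.

```python
def all_repeats_done(completed: dict[str, int], repeats: dict[str, int]) -> bool:
    """Return True once every testcase has run at least its configured number of repeats.

    `completed` and `repeats` are hypothetical dicts keyed by testcase name;
    a testcase missing from `completed` is treated as having run 0 times.
    """
    return all(completed.get(name, 0) >= required for name, required in repeats.items())


repeats = {"login": 3, "checkout": 5}  # hypothetical "Repeats" column values
print(all_repeats_done({"login": 3, "checkout": 4}, repeats))  # False: checkout needs 1 more run
print(all_repeats_done({"login": 3, "checkout": 5}, repeats))  # True: the load test can stop
```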

A constant test plan briefly ramps up users until it reaches a steady state. This type of test plan is appropriate when you want to prove that a system can sustain load over a long time; for example, we might wish to show that a system can support 100 users for 12 hours.

A linear (continuous) test plan adds users at a steady rate, indefinitely. This is the simplest type of test plan to set up, but it is generally not recommended.

A stepped test plan ramps up by a fixed number of users at regular intervals, holding at a steady state between ramps. This type of test plan is appropriate for measuring the effect of modest changes to a system. For example, if we know that a system supported 1,000 virtual users last month, we might assess any change in its performance by running a load test from 500 to 1,500 users, adding 50 users every other minute.
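
The arithmetic behind the stepped example can be checked with a short script. This is a minimal sketch, assuming each ramp happens all at once at the start of its interval (the application may add users more gradually within a step); `stepped_schedule` is a hypothetical helper, not the application's implementation.

```python
def stepped_schedule(start_users: int, end_users: int, step_users: int, interval_min: int):
    """Return (minute, users) pairs: one entry for the start and one per ramp step."""
    if step_users <= 0:
        raise ValueError("step_users must be positive")
    schedule = []
    minute, users = 0, start_users
    while users < end_users:
        schedule.append((minute, users))
        # Add a fixed number of users, never overshooting the target.
        users = min(users + step_users, end_users)
        minute += interval_min
    schedule.append((minute, users))
    return schedule


plan = stepped_schedule(500, 1500, 50, 2)
print(len(plan) - 1, "steps; reaches", plan[-1][1], "users at minute", plan[-1][0])
# prints: 20 steps; reaches 1500 users at minute 40
```

Under these assumptions, ramping from 500 to 1,500 users takes 20 steps, so the ramp alone lasts about 40 minutes.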

An exponential test plan increases the existing pool of virtual users by a fixed percentage at each ramp, holding at a steady state between ramps. This type of test plan is appropriate for measuring the performance of a system for the first time. For example, if we suspect that an unfamiliar system can support somewhere between 100 and 10,000 virtual users, we might establish a baseline measurement of performance by starting at 100 virtual users and adding 25% every other minute until reaching 10,000.
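
The exponential example can be worked through the same way. This is a minimal sketch, assuming each step adds the stated percentage of the current pool rounded up to a whole user and that the last step is capped at the target; `exponential_schedule` is a hypothetical helper, and the application's own rounding may differ.

```python
def exponential_schedule(start_users: int, end_users: int, growth_pct: int, interval_min: int):
    """Return (minute, users) pairs for a percentage-based ramp, capped at end_users."""
    if start_users <= 0 or growth_pct <= 0:
        raise ValueError("start_users and growth_pct must be positive")
    schedule = []
    minute, users = 0, start_users
    while users < end_users:
        schedule.append((minute, users))
        # Grow by growth_pct percent, rounding the increment up to a whole user
        # (integer ceiling division avoids floating-point rounding surprises).
        increment = (users * growth_pct + 99) // 100
        users = min(users + increment, end_users)
        minute += interval_min
    schedule.append((minute, users))
    return schedule


plan = exponential_schedule(100, 10_000, 25, 2)
print([users for _, users in plan[:5]])  # [100, 125, 157, 197, 247]
print(len(plan) - 1, "steps; reaches", plan[-1][1], "users at minute", plan[-1][0])
# prints: 21 steps; reaches 10000 users at minute 42
```

Because each step grows the pool by a constant factor, covering two orders of magnitude (100 to 10,000) takes only about 21 steps, which is why this plan suits systems whose capacity is unknown.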

Each test plan has its own specialized configuration options. The resulting graph shows the most likely outcome of the selected test plan; however, it cannot take into account every variable that might affect the behavior of a load test and is only a prediction.

A test plan can be adjusted after a load test has started; however, you can only add users, not remove them.
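
The add-only rule can be expressed as a simple validation. This is a minimal sketch; `users_to_add` is a hypothetical function that only illustrates the constraint and is not part of the application.

```python
def users_to_add(current_users: int, requested_users: int) -> int:
    """Return how many users to add, rejecting any request to reduce the count."""
    if requested_users < current_users:
        raise ValueError(
            f"cannot reduce users from {current_users} to {requested_users} during a running test"
        )
    return requested_users - current_users


print(users_to_add(200, 250))  # 50
try:
    users_to_add(200, 150)
except ValueError as err:
    print("rejected:", err)
```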

The sample period is the length of time over which metrics are sampled before the values are saved. It should be shorter for short tests and longer for long tests. For example, if your test lasts only an hour, sampling every 10 seconds makes sense; if your test is intended to run overnight, the sample period should be much longer, around 5 minutes. This makes the data easier to interpret. It also limits the amount of data collected: extended tests collect large amounts of data, which could cause the program to run out of memory and halt the test prematurely. As a rule of thumb, tests lasting multiple hours should use sample periods on the order of minutes, while short tests can handle sample periods as small as 5 seconds.
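
The effect of the sample period on data volume is simple division. This is a minimal sketch, assuming each sample period stores one value per metric series; the actual storage format and memory footprint of the application are not described here, and `samples_per_series` is a hypothetical helper.

```python
HOUR_S = 3600


def samples_per_series(test_duration_s: int, sample_period_s: int) -> int:
    """Number of saved values for one metric series over the whole test."""
    return test_duration_s // sample_period_s


for label, duration_s, period_s in [
    ("1-hour test, 10 s period", 1 * HOUR_S, 10),
    ("12-hour test, 10 s period", 12 * HOUR_S, 10),
    ("12-hour test, 5 min period", 12 * HOUR_S, 5 * 60),
]:
    print(f"{label}: {samples_per_series(duration_s, period_s)} samples per series")
# 1-hour test, 10 s period: 360 samples per series
# 12-hour test, 10 s period: 4320 samples per series
# 12-hour test, 5 min period: 144 samples per series
```

Keeping a 10-second period for an overnight run multiplies the stored values by 30 compared with a 5-minute period, and that factor applies to every metric being collected.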

The detailed page duration and individual URL metrics gather extra information during a load test.

Testcases are added to the load test using the drop-down menu located above the table that lists all testcases in the load test. Select the desired testcase from the menu and click the '+' button to add it to the load test. To remove a testcase from the load test, select it in the table and click the '-' button.

Once a testcase has been added to the load test, it can be configured by double-clicking its entry in the table. The settings that can be modified are:

This setting limits the rate at which the simulated user can read data from or write data to the server. The result is a more accurate simulation of the expected server load. Accurately simulating the network speed of each user also results in a more accurate simulation of resource usage on the server, especially open network connections. For example, if your application generates a 40 KB graph, the browser might spend a fraction of a second reading the graph over a LAN, but could take up to 13 seconds over a modem. Holding the socket open for 13 seconds instead of a fraction of a second puts a greater burden on the server and can significantly influence the resulting performance measurements.
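
The difference between LAN and modem transfer times follows from size divided by bandwidth. This is a minimal sketch; the 10 Mbit/s LAN and 28.8 kbit/s modem rates are assumed values chosen for illustration, not figures from the application, and `transfer_seconds` is a hypothetical helper.

```python
def transfer_seconds(size_bytes: int, bits_per_second: float) -> float:
    """Ideal time to move size_bytes at a given line rate (no protocol overhead or latency)."""
    return size_bytes * 8 / bits_per_second


GRAPH_BYTES = 40 * 1024  # a 40 KB graph, as in the example
for name, bps in [("10 Mbit/s LAN", 10_000_000), ("28.8 kbit/s modem", 28_800)]:
    print(f"{name}: {transfer_seconds(GRAPH_BYTES, bps):.2f} s")
# 10 Mbit/s LAN: 0.03 s
# 28.8 kbit/s modem: 11.38 s
```

The ideal modem figure of about 11.4 seconds is a lower bound; protocol overhead and real-world line conditions plausibly push actual transfers toward the 13 seconds mentioned in the example, during which the server keeps the connection open.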

The load test cannot be run until the application detects no invalid entries or configuration errors. Once the load test configuration is valid, the Run button in the Load Test Configuration Editor is enabled. Clicking this button starts the load test and opens the Load Test Results View.